Open Data: Climate

Analyzing Global Weather Measurements from the GSOD for the period 1929 – 2009

Objective

The Essentia data science team wanted to demonstrate how easily any researcher can gain immediate value from large public climate data sets by using Essentia in the cloud.

In this example, they used the public dataset Daily Global Weather Measurements, 1929-2009 (NCDC, GSOD), available for free on Amazon Web Services. With a total data size of 20 GB, they estimated that a single Essentia instance would be enough to perform all the required processing and analysis.

1. Connecting to the Data

First, the team launched an m3.medium EC2 instance on AWS with the Essentia AMI installed.

The data was already publicly available on AWS as an EBS snapshot, so they only had to copy the snapshot to an EBS volume mounted on their EC2 instance and then transfer the files to an S3 bucket. From the Essentia UI, they added the S3 bucket as a data repository, and that was it.

2. Exploring the Data

Using the data viewer features, they browsed the directories and files to see how many files they were dealing with and the overall data size.
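For readers without access to the Essentia data viewer, the same two numbers (file count and total size) can be approximated on a local copy of the data. The following is a minimal Python sketch, not part of Essentia; the function name `summarize_directory` and the demo files are hypothetical and exist only to illustrate the idea.

```python
import os
import tempfile


def summarize_directory(root):
    """Return (file_count, total_bytes) for every file under `root`."""
    file_count = 0
    total_bytes = 0
    # os.walk visits `root` and all of its subdirectories.
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            file_count += 1
            total_bytes += os.path.getsize(os.path.join(dirpath, name))
    return file_count, total_bytes


# Demo on a throwaway directory with made-up files (not real weather data).
with tempfile.TemporaryDirectory() as tmp:
    os.makedirs(os.path.join(tmp, "1929"))
    with open(os.path.join(tmp, "1929", "a.txt"), "wb") as f:
        f.write(b"x" * 100)
    with open(os.path.join(tmp, "b.txt"), "wb") as f:
        f.write(b"y" * 50)
    demo_count, demo_bytes = summarize_directory(tmp)

print(f"{demo_count} files, {demo_bytes} bytes")
```

Pointing `summarize_directory` at the mounted EBS volume would give a rough check of the size quoted for the dataset before any files are transferred.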

They then created some exploratory data categories to get a sense of the structure and types of the data. This was done without performing any ETL or moving data out of S3.

3. Preparing Data

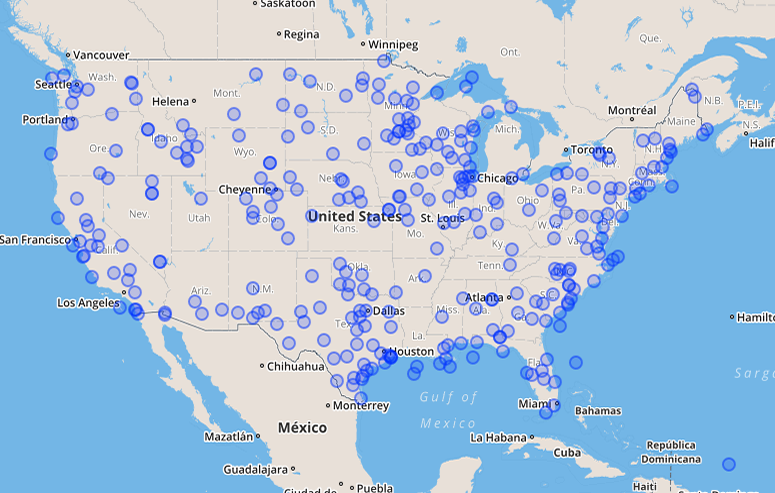

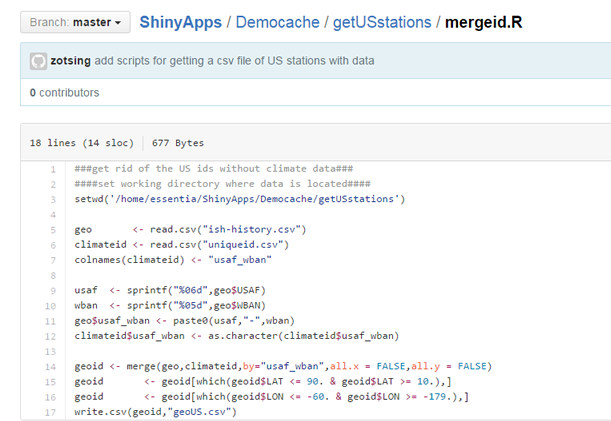

For their demo, they wanted to focus on US-based weather stations.

Using the command line tools, they created a script that filtered out all non-US weather stations based on country code and then removed any stations that did not contain any data. Once the script was executed, all the target data was loaded into Essentia’s in-memory DB.
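The team's actual script used Essentia's command line tools and is not reproduced here. As an illustration of the filtering logic only, the two steps (keep stations with the US country code, then drop stations with no data) might look like the following Python sketch. The record layout, the field names `station_id` and `country`, and the sample values are all assumptions made for this example, not the real dataset schema.

```python
from collections import Counter


def select_us_stations(stations, observations, country_code="US"):
    """Return IDs of stations in `country_code` that have at least one observation."""
    # Count how many observations each station contributed.
    counts = Counter(obs["station_id"] for obs in observations)
    # Keep a station only if it matches the country code and has data.
    return [
        s["station_id"]
        for s in stations
        if s["country"] == country_code and counts[s["station_id"]] > 0
    ]


# Hypothetical sample records, not taken from the real dataset.
stations = [
    {"station_id": "A1", "country": "US"},
    {"station_id": "B2", "country": "CA"},  # non-US, filtered out
    {"station_id": "C3", "country": "US"},  # US, but has no observations
]
observations = [
    {"station_id": "A1", "temp_f": 51.2},
    {"station_id": "B2", "temp_f": 40.0},
]

us_ids = select_us_stations(stations, observations)
print(us_ids)
```

Counting observations once up front keeps the filter to a single pass over each input, which matters when the observation list is far larger than the station list.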

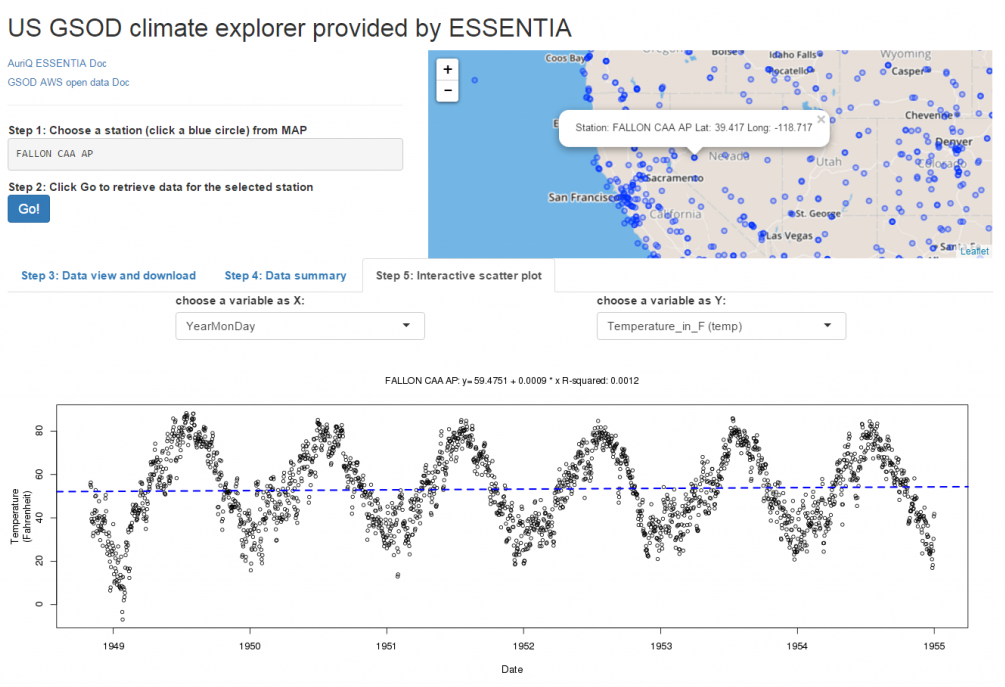

4. Analyzing the Data in R

The data was then streamed into an R data frame for statistical analysis and visualized through Shiny, which offers a variety of visualization features including maps, charts, and plots, as shown in the examples above.

This is just a simple example of what can be accomplished using Essentia. The data science team could also have combined this data with other data sets or applied predictive or machine learning algorithms for forecasting and modeling.

For detailed instructions and sample scripts, see https://github.com/auriq/ShinyApps.